IPBench: Evaluating Large Language Models in Intellectual Property Applications

🌐 Homepage | 🤗 Dataset Download | 📂 GitHub Repository

Why Do We Need a Dedicated AI Benchmark for Intellectual Property?

In critical IP service scenarios—such as patent examination, technology novelty searches, and legal consultations—the accuracy of domain expertise and compliance with legal frameworks are paramount. While large language models (LLMs) excel in general tasks, they often struggle with specialized IP challenges like claim interpretation or technical feature analysis.

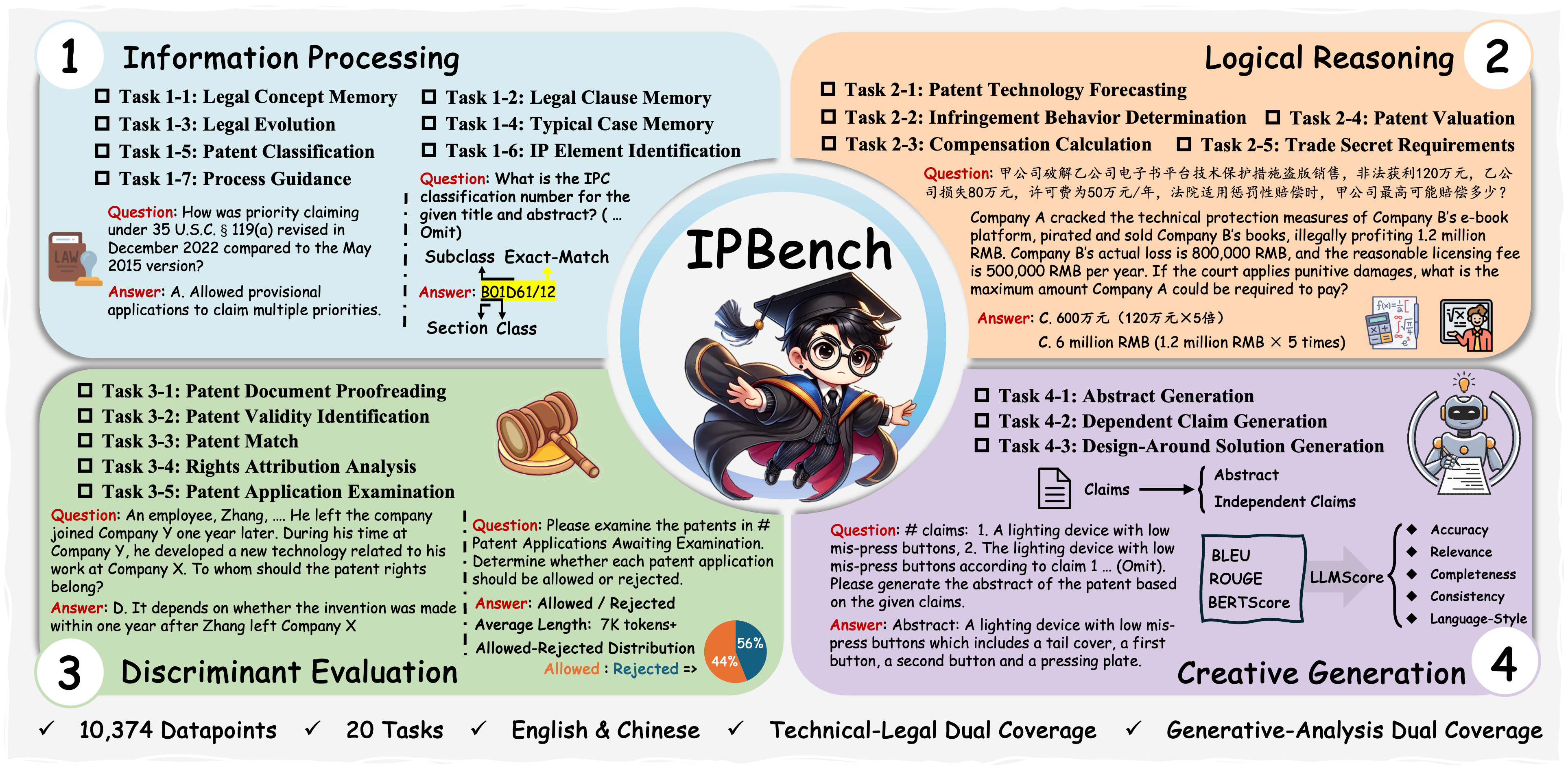

The IPBench research team addresses this gap through a four-tier evaluation framework based on Webb’s Depth of Knowledge (DOK) theory:

-

Information Processing: Extracting key elements from patent documents -

Logical Reasoning: Defining the scope of patent claims -

Discriminant Evaluation: Analyzing technical novelty and infringement risks -

Creative Generation: Drafting technical disclosure documents

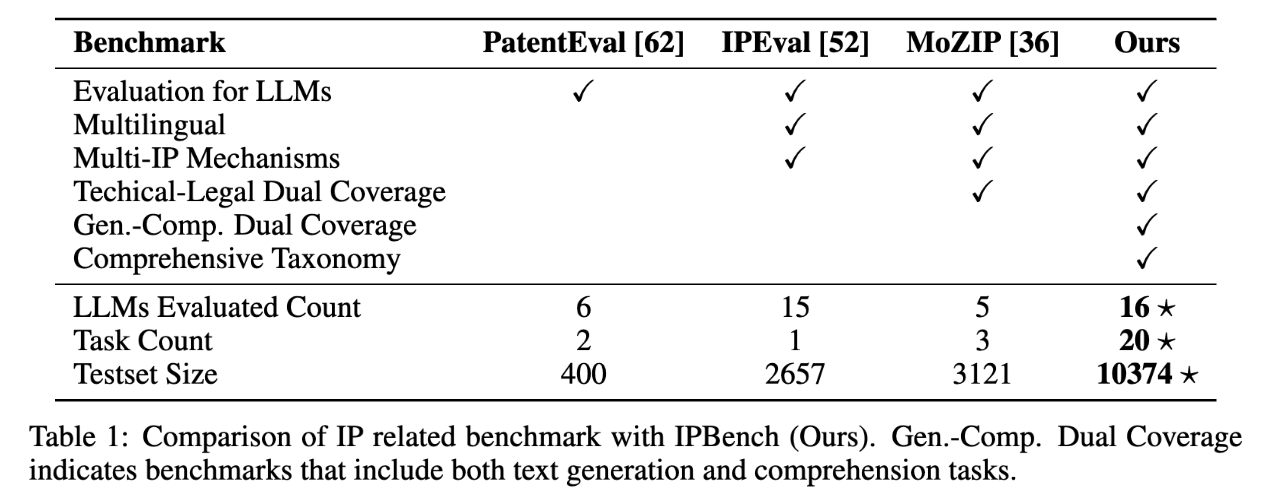

With 10,374 bilingual test cases aligned with U.S. and Chinese legal systems, this benchmark systematically evaluates 20 core capabilities across 8 IP mechanisms.

Three Technical Breakthroughs

1. Multidimensional Task Architecture

IPBench pioneers three advancements over traditional legal benchmarks:

-

Cross-Modal Processing: Supports text, claims, and technical drawings -

Dynamic Knowledge Updates: Includes latest patent cases (2020-2025) -

Regional Legal Adaptation: Bilingual testing for U.S. and Chinese laws

2. Real-World Task Simulation

Examples from end-to-end IP workflows:

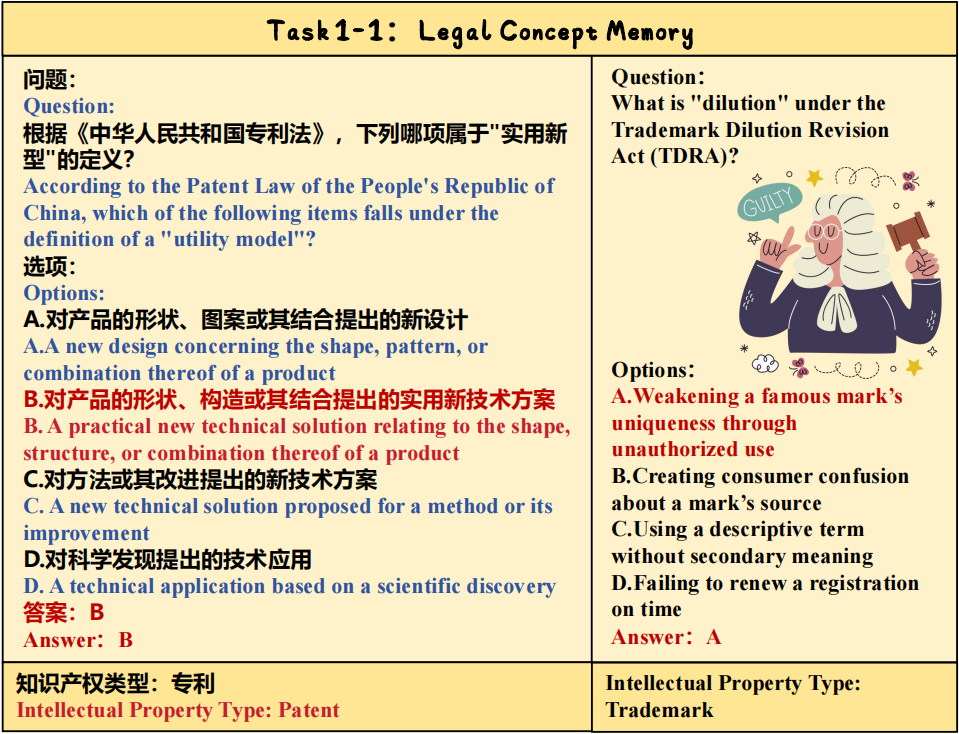

Task 1-1: Technical feature extraction from patent specifications

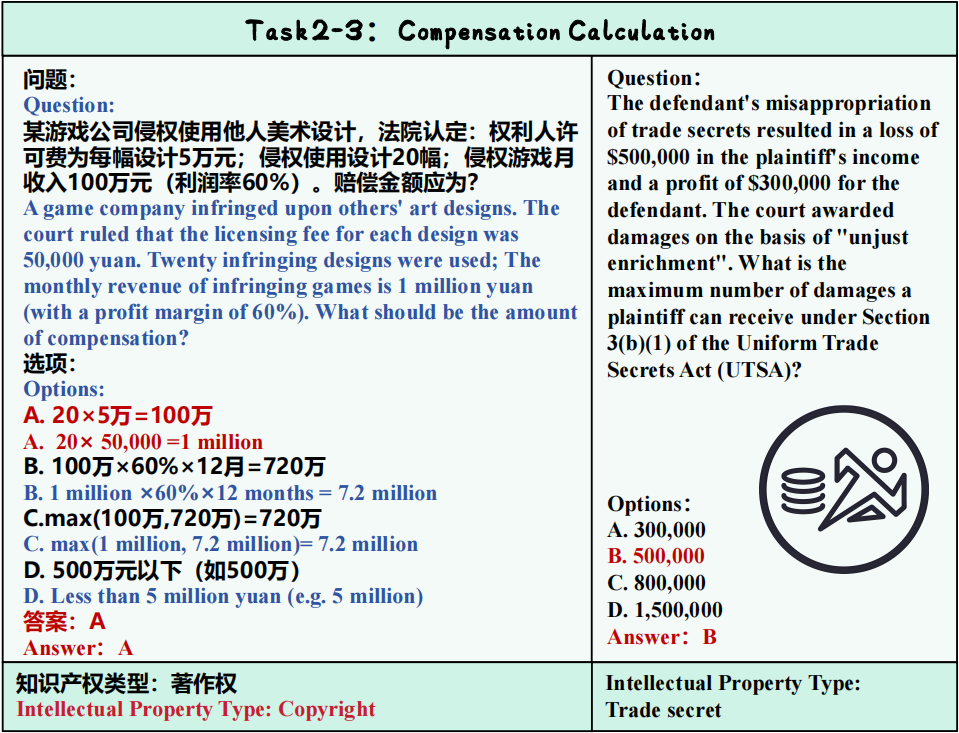

Task 2-3: Semantic conflict detection in claim charts

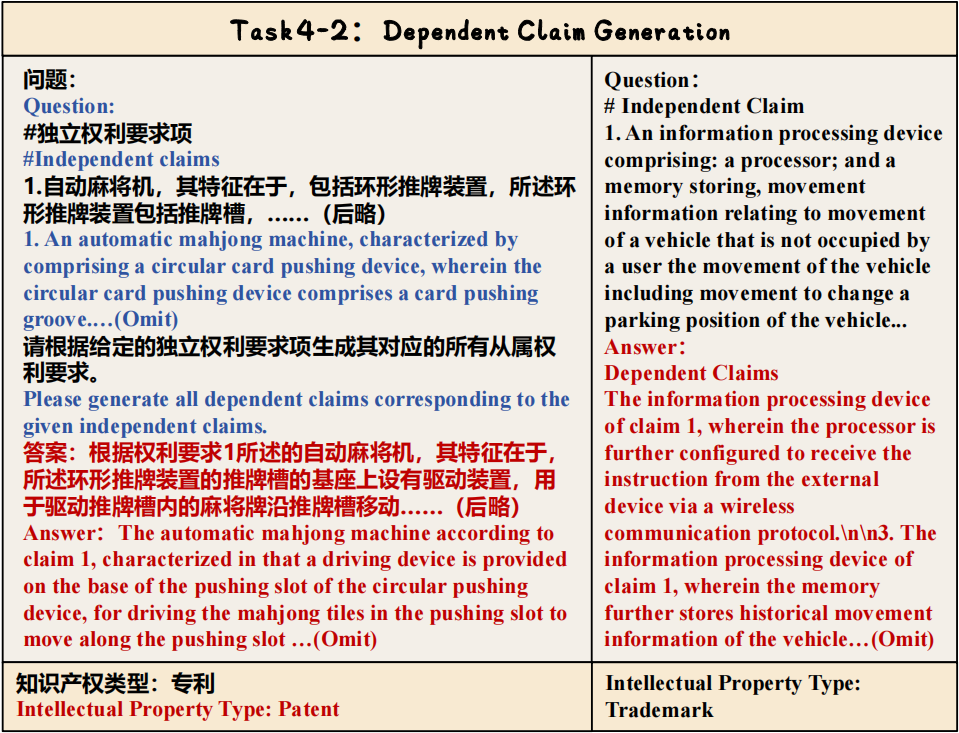

Task 4-2: Automated generation of technical disclosures

3. Granular Error Analysis

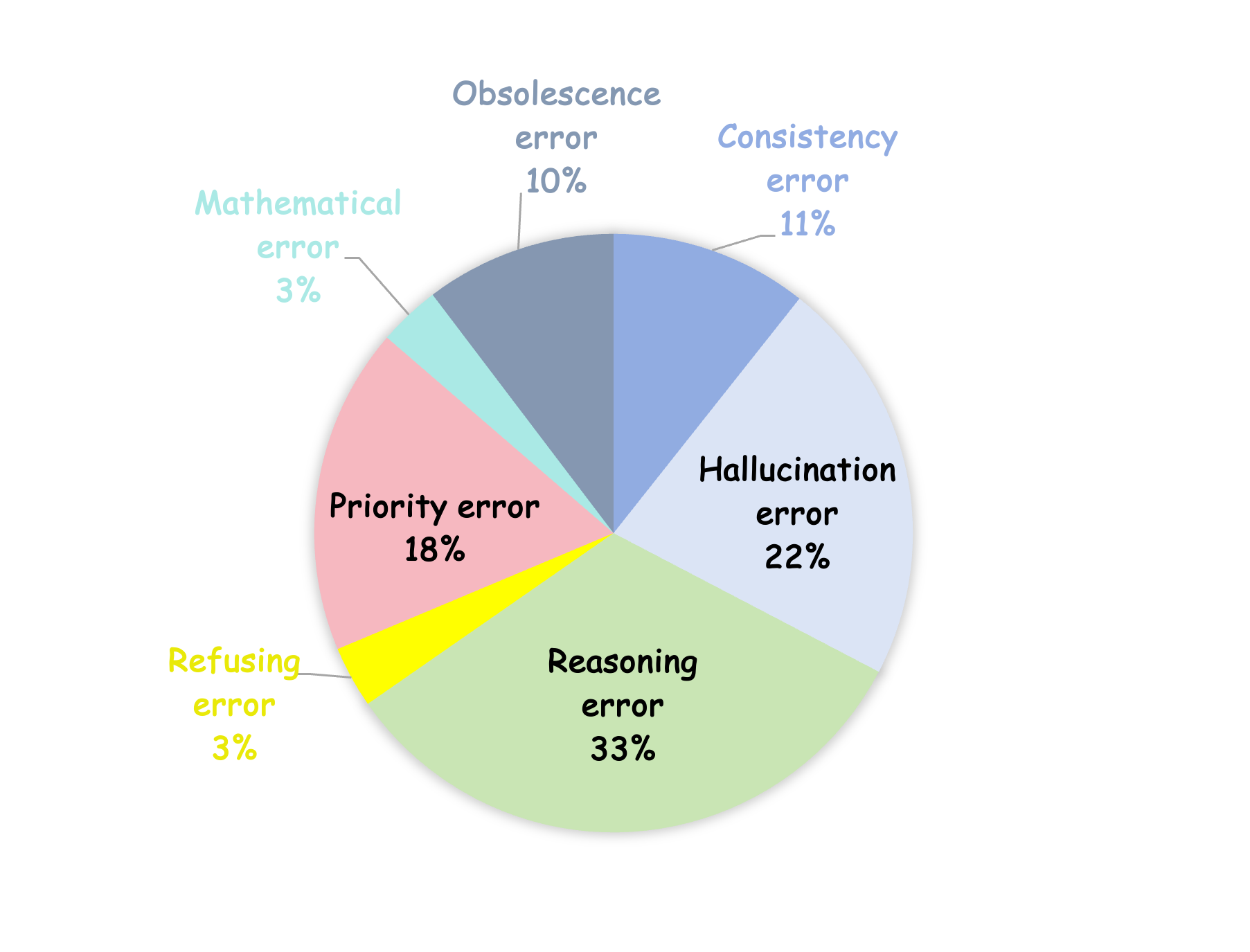

A study of 300 GPT-4o error cases identified 7 failure patterns:

-

Reasoning Errors (33%): Misjudging technical causation -

Obsolescence Errors (22%): Ignoring 2024 patent law amendments -

Feature Confusion (18%): Mistaking “graphene coating” for “carbon nanotube structures”

Getting Started with IPBench

Environment Setup

# Install dependencies

pip install -r requirements.txt

Model Inference

Three testing modes supported:

# Zero-shot inference

sh inference.sh

# API testing (OpenAI-compatible)

sh inference-api.sh

# Chain-of-Thought prompting

sh inference-cot.sh

Evaluation

# Multiple-choice evaluation

sh eval-mcqa.sh

# Classification tasks (3-5 classes)

sh eval-3-5.sh

# Generation quality assessment

sh eval-generation.sh

Key Findings from Experiments

1. Domain-Specific Tuning Matters

General-purpose LLMs achieved only 54.3% accuracy, while models fine-tuned with patent data reached 78.6%.

2. Legal Jurisdiction Gaps Exist

In “Prior Use Defense” tasks, accuracy for Chinese laws (82%) surpassed U.S. compliance (67%), reflecting training data biases.

3. Multi-Step Reasoning Remains Challenging

Even GPT-4o scored just 61.2% on “Inventiveness Analysis” tasks using Chain-of-Thought prompting.

4. Generation Quality Varies Widely

For technical disclosure drafting:

-

Format compliance: 89/100 -

Technical accuracy: 42/100

Practical Recommendations for Developers

Data Enhancement Strategies

-

Integrate WIPO PATENTSCOPE’s million-scale patent database -

Build “Technical Feature-Legal Clause” knowledge graphs -

Include claim amendment histories

Model Optimization Pathways

-

Develop legal version control modules -

Embed IPC classification encoders -

Design technical similarity layers

Application Scenarios

-

Smart Patent Search: Precise prior art matching -

Infringement Risk Alerts: Automated technical feature analysis -

Examination Assistants: Drafting office action templates

Future Roadmap

The team plans to:

-

Expand to EU/Japanese legal systems (2025 Q4) -

Add image-based claim parsing (2026 Q1) -

Release open-source tuning framework IP-Tuner (2026 Q2)

Resources

-

Full Dataset: Hugging Face Hub -

Technical White Paper: IPBench Documentation -

Collaboration: wangqiyao@mail.dlut.edu.cn

Related Reads:

Semantic Structure of Patent Claims

U.S.-China Patent Law Comparison

Automated Disclosure Drafting Guide