The New Era of Interface Understanding: When AI Truly “Sees” Screens

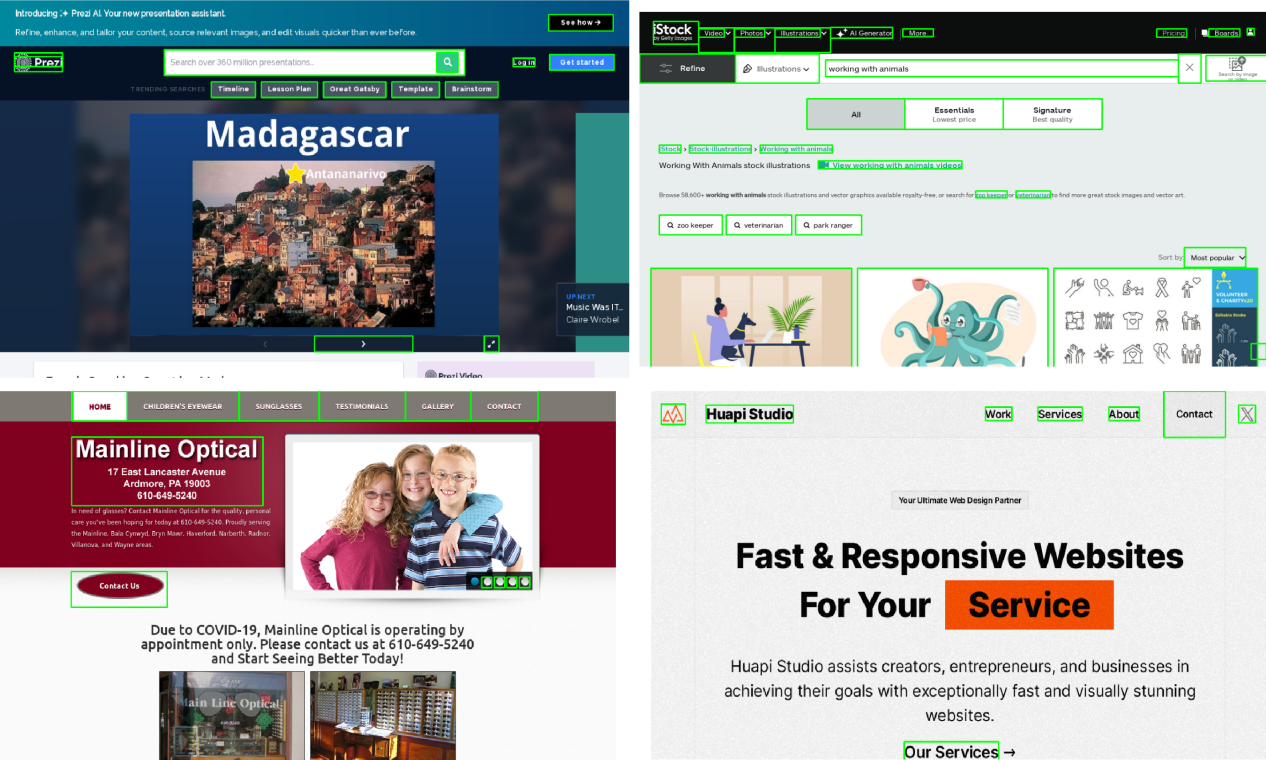

Traditional automation solutions rely on HTML parsing or system APIs to interact with user interfaces. Microsoft Research's open-source OmniParser project introduces a groundbreaking vision-based approach: it analyzes screenshots to precisely identify interactive elements and understand their functions. This innovation boosted GPT-4V's operation accuracy by 40% on the WindowsAgentArena benchmark, marking the dawn of visual intelligence in interface automation.

Technical Breakthrough: Dual-Engine Architecture

1. Data-Driven Learning Framework

- 「67,000+ Annotated UI Components」: sampled from 100K popular webpages in the ClueWeb dataset and labeled through DOM tree extraction, covering 20 common controls such as buttons, input fields, and menus.
- 「7,000+ Semantic Descriptions」: functional annotations such as “blue circular search button” and “date picker with dropdown arrow” that establish precise visual-semantic mappings.
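DOM-based labeling of this kind can be pictured as a tree walk that keeps the on-screen boxes of interactive elements. The sketch below is a minimal illustration under stated assumptions: `DomNode`, `extract_interactive_boxes`, and `INTERACTIVE_TAGS` are hypothetical names, and the tag set is a placeholder rather than the dataset's actual 20-control taxonomy.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical placeholder for "interactive" tags; not the dataset's real taxonomy.
INTERACTIVE_TAGS = {"a", "button", "input", "select", "textarea"}


@dataclass
class DomNode:
    """Minimal stand-in for a rendered DOM element and its on-screen box."""
    tag: str
    box: tuple[int, int, int, int]  # (x1, y1, x2, y2) in screenshot pixels
    children: list[DomNode] = field(default_factory=list)


def extract_interactive_boxes(root: DomNode) -> list[tuple[str, tuple[int, int, int, int]]]:
    """Collect (tag, box) pairs for visible interactive elements in document order."""
    labels = []
    stack = [root]
    while stack:
        node = stack.pop()
        x1, y1, x2, y2 = node.box
        # Keep interactive elements only if their box has a non-zero area.
        if node.tag in INTERACTIVE_TAGS and x2 > x1 and y2 > y1:
            labels.append((node.tag, node.box))
        # Push children reversed so the first child is visited next (pre-order).
        stack.extend(reversed(node.children))
    return labels


if __name__ == "__main__":
    page = DomNode("body", (0, 0, 1280, 800), [
        DomNode("div", (0, 0, 1280, 60), [DomNode("button", (1200, 10, 1260, 50))]),
        DomNode("input", (100, 100, 400, 130)),
        DomNode("a", (0, 0, 0, 0)),  # collapsed link: zero-area box, skipped
    ])
    print(extract_interactive_boxes(page))
```

Each (tag, box) pair would then become one training label for the detector; the zero-area check drops elements that exist in the DOM but are not visible on screen.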

2. Collaborative Model Architecture

- 「YOLOv8-Optimized Detection Model」: achieves 91.3% accuracy on icons smaller than 32 px after fine-tuning, a 23% improvement over baseline YOLO.
- 「BLIP-2 Enhanced Description Model」: combines a visual encoder with a language decoder and scores 0.78 BLEU-4 on semantic descriptions (35% higher than its predecessors).
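Object detectors in the YOLO family produce several overlapping candidate boxes for the same control, which are pruned with non-maximum suppression (NMS) before the regions are captioned. The sketch below is a generic greedy NMS written to illustrate that step; it is not taken from the OmniParser code, and the 0.5 IoU threshold is a placeholder.

```python
def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box in each overlapping group.

    detections: list of (box, score) pairs; returns the kept pairs,
    ordered by descending score.
    """
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep this box only if it does not overlap any already-kept box too much.
        if all(iou(box, k) < iou_threshold for k, _ in kept):
            kept.append((box, score))
    return kept


if __name__ == "__main__":
    dets = [((0, 0, 10, 10), 0.9), ((1, 1, 10, 10), 0.8), ((20, 20, 30, 30), 0.7)]
    print(non_max_suppression(dets))
```

In the example, the second box overlaps the first with an IoU of 0.81 and is dropped, while the distant third box survives.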

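```python
# Typical implementation (simplified, illustrative interface: Detector and
# Describer stand in for the repository's detection and captioning utilities)
from PIL import Image

from omniparser import Detector, Describer

detector = Detector("weights/icon_detect")
describer = Describer("weights/icon_caption_florence")

screenshot = Image.open("ui_screen.png").convert("RGB")  # Load the screenshot
boxes = detector.predict(screenshot)                    # Get interactive regions
descriptions = describer.generate(screenshot, boxes)    # Caption each cropped region
```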
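For an agent, the parsed regions are usually handed to a language model as structured text so the model can choose an element by ID, and the chosen ID is mapped back to a screen coordinate. A minimal sketch, assuming each parsed element is a dict with hypothetical `box` and `description` keys; the prompt format and both function names are illustrative, not OmniParser's actual output format.

```python
def build_screen_prompt(elements):
    """Render parsed elements as a numbered list an LLM can refer to by ID.

    elements: list of dicts with hypothetical keys "box" (x1, y1, x2, y2)
    and "description" (the caption generated for that region).
    """
    lines = []
    for idx, el in enumerate(elements):
        x1, y1, x2, y2 = el["box"]
        lines.append(f"ID {idx}: {el['description']} at ({x1}, {y1}, {x2}, {y2})")
    return "\n".join(lines)


def click_point(elements, element_id):
    """Return the centre of the chosen element's box as integer (x, y) coordinates."""
    x1, y1, x2, y2 = elements[element_id]["box"]
    return ((x1 + x2) // 2, (y1 + y2) // 2)


if __name__ == "__main__":
    elements = [
        {"box": (1200, 10, 1260, 50), "description": "blue circular search button"},
        {"box": (100, 100, 400, 130), "description": "date picker with dropdown arrow"},
    ]
    print(build_screen_prompt(elements))
    print(click_point(elements, 0))  # where to click if the model answers "ID 0"
```

Referring to elements by ID keeps the model's answer short and unambiguous; the pixel arithmetic stays in ordinary code rather than in the model's output.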

Performance Validation: Benchmark Dominance

| Benchmark | Comparison Baseline | Accuracy Gain | Setting |
|---|---|---|---|
| ScreenSpot | Raw GPT-4V | +40% | Visual-only |
| Mind2Web | HTML-Assisted Solution | +28% | No HTML source |
| AITW (Android) | System View Hierarchy | +33% | Cross-platform |
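The table does not state whether each gain is relative or an absolute difference in percentage points, and the two readings differ substantially. The sketch below shows both calculations; the 0.50 and 0.70 accuracies are hypothetical values chosen only to illustrate the difference, not figures from the benchmarks.

```python
def accuracy_gains(baseline, improved):
    """Return (absolute gain in percentage points, relative gain in percent).

    baseline and improved are accuracies expressed as fractions in (0, 1].
    """
    absolute_pp = (improved - baseline) * 100
    relative_pct = (improved - baseline) / baseline * 100
    return absolute_pp, relative_pct


if __name__ == "__main__":
    # Hypothetical accuracies: 50% before, 70% after.
    print(tuple(round(g, 1) for g in accuracy_gains(0.50, 0.70)))  # (20.0, 40.0)
```

With these illustrative numbers, the same improvement reads as "+20 points" or "+40%", depending on the convention used.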

「Core Advantages」 in the WindowsAgentArena evaluation:

- 79.5% success rate in form completion
- 68.2% accuracy in multi-step operations
- 3.8x faster cross-application execution
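The list does not say how these figures are aggregated or what the 3.8x speedup is measured against. As an illustration only, a success rate is typically the fraction of successful episodes per task category, and a speedup is the ratio of baseline time to new time. The `Episode` record and its fields below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    """One hypothetical agent run: task category, outcome, and wall-clock time."""
    category: str
    succeeded: bool
    seconds: float


def success_rates(episodes):
    """Fraction of successful episodes per task category."""
    totals, wins = {}, {}
    for ep in episodes:
        totals[ep.category] = totals.get(ep.category, 0) + 1
        wins[ep.category] = wins.get(ep.category, 0) + int(ep.succeeded)
    return {cat: wins[cat] / totals[cat] for cat in totals}


def speedup(baseline_seconds, new_seconds):
    """How many times faster the new run is (3.8 means 3.8x faster)."""
    return baseline_seconds / new_seconds


if __name__ == "__main__":
    runs = [
        Episode("form", True, 10.0),
        Episode("form", False, 12.0),
        Episode("multi-step", True, 30.0),
    ]
    print(success_rates(runs))
    print(speedup(38.0, 10.0))
```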

Enterprise-Grade Solutions

1. Automated Testing Revolution

- 「Component Responsiveness Check」: automatically detects inactive buttons and malfunctioning fields.
- 「Cross-Platform Validation」: unified detection across web, Windows, and Android interfaces.
- 「Visual Regression Testing」: pixel-level comparison to catch UI anomalies.
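Pixel-level regression checks can be as simple as diffing a baseline screenshot against a new one and reporting how much changed and where. The dependency-free sketch below works on grayscale images stored as nested lists (a real pipeline would load screenshots with an imaging library); the function name and the `tolerance` parameter, which absorbs minor anti-aliasing noise, are illustrative assumptions.

```python
def pixel_diff(baseline, current, tolerance=0):
    """Compare two equal-sized grayscale images given as lists of rows.

    Returns (fraction of differing pixels, bounding box of the changed
    region as inclusive (x1, y1, x2, y2), or None if nothing changed).
    """
    if len(baseline) != len(current) or any(
        len(a) != len(b) for a, b in zip(baseline, current)
    ):
        raise ValueError("images must have the same dimensions")
    # A pixel counts as changed when its intensity moves by more than `tolerance`.
    changed = [
        (x, y)
        for y, (row_a, row_b) in enumerate(zip(baseline, current))
        for x, (pa, pb) in enumerate(zip(row_a, row_b))
        if abs(pa - pb) > tolerance
    ]
    if not changed:
        return 0.0, None
    total = sum(len(row) for row in baseline)
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return len(changed) / total, (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    before = [[0, 0, 0], [0, 0, 0]]
    after = [[0, 0, 0], [0, 255, 10]]
    print(pixel_diff(before, after, tolerance=20))
```

The bounding box tells a tester where to look; combining it with the detector's boxes would show which control the change affects.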

2. Accessibility Breakthroughs

- 62% faster voice control response
- Dynamic interface narration in under 200 ms
- Real-time descriptions in 16 languages
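Before parsed elements can be narrated, they need a sensible reading order. A minimal sketch, assuming each element carries a hypothetical `box` (x1, y1, x2, y2) and `description` field; the row-grouping heuristic and the 10-pixel tolerance are illustrative choices, not documented OmniParser behavior.

```python
def narration_order(elements, row_tolerance=10):
    """Order element descriptions top-to-bottom, then left-to-right.

    Elements whose top edges lie within `row_tolerance` pixels of the
    first element in the current row are treated as one visual row.
    """
    rows = []
    for el in sorted(elements, key=lambda e: e["box"][1]):
        if rows and abs(el["box"][1] - rows[-1][0]["box"][1]) <= row_tolerance:
            rows[-1].append(el)
        else:
            rows.append([el])
    ordered = []
    for row in rows:
        ordered.extend(sorted(row, key=lambda e: e["box"][0]))  # left to right
    return [el["description"] for el in ordered]


if __name__ == "__main__":
    screen = [
        {"box": (300, 12, 360, 40), "description": "Search button"},
        {"box": (10, 10, 280, 40), "description": "Search field"},
        {"box": (10, 100, 200, 130), "description": "Date picker"},
    ]
    print(narration_order(screen))
```

Grouping by rows first prevents a slightly taller element on the right from being announced before the field to its left.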

3. Industry Deployment Cases

- 「E-commerce Platform」: reduced checkout testing from 8 hours to 23 minutes.
- 「Financial Institution」: improved compliance coverage from 78% to 99.6%.
- 「Government Portal」: cut accessibility costs by 84%.

Open-Source Ecosystem

1. Modular Architecture

- 「Pre-trained Models」: detection and description models available on Hugging Face.
- 「API Middleware」: RESTful integration support.
- 「Extensible Plugins」: compatible with Phi-3.5-V, Llama-3.2-V, and other models.
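A RESTful integration typically means posting a screenshot to a parsing endpoint and reading back JSON. The sketch below only builds the request with the Python standard library; the endpoint URL, the `/parse/` path, and the `base64_image` field name are assumptions for illustration and should be checked against the middleware's actual API.

```python
import base64
import json
import urllib.request


def build_parse_request(image_bytes, endpoint="http://localhost:8000/parse/"):
    """Build (but do not send) a JSON POST request carrying a base64 screenshot.

    The default endpoint and the "base64_image" field are hypothetical.
    """
    payload = {"base64_image": base64.b64encode(image_bytes).decode("ascii")}
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

To send it, open the screenshot in binary mode, pass its bytes to `build_parse_request`, and call `urllib.request.urlopen(req)`, then decode the response with `json.load`. Keeping request construction separate from sending makes it easy to test without a running server.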

2. Developer Resources

- Interactive demo: a Gradio-based testing platform
- Comprehensive documentation: 23 practical use cases
- Community tools: local logging and multi-agent orchestration

Roadmap: Future Developments

- 「2024 Q4」: iOS/macOS support
- 「2025 Q1」: mobile-optimized inference engine
- 「2025 Q3」: multimodal instruction understanding
- 「2026 Q1」: self-evolving training framework

Getting Started: 3-Step Implementation

```bash
git clone https://github.com/microsoft/OmniParser  # 1. Clone the repository
cd OmniParser
pip install -r requirements.txt                    # 2. Install dependencies
python gradio_demo.py                              # 3. Launch the Gradio visual interface
```

Conclusion: The Future of Human-Computer Interaction

OmniParser represents more than a technical innovation; it marks a paradigm shift in interface interaction. By enabling machines to understand screens the way humans do, this open-source project moves automation beyond the limits of scripted workflows toward cognitive intelligence. From developer tools to accessibility services, the vision-parsing revolution is just beginning.

「Explore Further」: